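Starting with ASM 11.2, the ASM spfile can be stored in an ASM disk group. Indeed, for a new ASM installation, OUI places the ASM spfile in a disk group. This holds for both Oracle Restart (single-instance) and RAC environments. The first disk group created during installation is the default location for the spfile, but this is not a requirement: the spfile can still reside on a file system, for example in the $ORACLE_HOME/dbs directory.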

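New ASMCMD commands

To support this feature, ASMCMD gained new commands to back up, copy and move the ASM spfile. These commands are:

.spbackup: backs up an ASM spfile to a backup file. The backup file is not a special file type and is not identified as an spfile.

.spcopy: copies an ASM spfile from the source location to the destination location.

.spmove: moves an ASM spfile from the source location to the destination location and automatically updates the GPnP profile.

The SQL commands create pfile from spfile and create spfile from pfile remain valid for an ASM spfile stored in a disk group.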

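ASM spfile stored in a disk group

In my environment, the ASM spfile is stored in disk group CRSDG

[grid@jyrac1 trace]$ asmcmd find --type ASMPARAMETERFILE +CRSDG "*"

+CRSDG/jyrac-cluster/asmparameterfile/REGISTRY.253.928747387

The result shows that the ASM spfile resides in a dedicated directory (asmparameterfile). It is stored in the disk group as a registry file, and its ASM file number is always 253.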
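The file name above packs several pieces of information into one string. As a minimal sketch (the helper name parse_asm_file_name and the regular expression are my own illustration, not an Oracle API), the components can be pulled apart like this:

```python
import re
from typing import NamedTuple


class AsmFileName(NamedTuple):
    disk_group: str
    directory: str
    tag: str
    file_number: int
    incarnation: int


# Hypothetical pattern: +<diskgroup>/<directory>/<tag>.<file number>.<incarnation>
_ASM_NAME = re.compile(
    r"^\+(?P<dg>[^/]+)/(?P<dir>.+)/(?P<tag>[^/.]+)\.(?P<num>\d+)\.(?P<inc>\d+)$"
)


def parse_asm_file_name(path: str) -> AsmFileName:
    """Split a fully qualified ASM file name into its components."""
    match = _ASM_NAME.match(path)
    if match is None:
        raise ValueError(f"not a fully qualified ASM file name: {path!r}")
    return AsmFileName(
        disk_group=match.group("dg"),
        directory=match.group("dir"),
        tag=match.group("tag"),
        file_number=int(match.group("num")),
        incarnation=int(match.group("inc")),
    )


if __name__ == "__main__":
    name = "+CRSDG/jyrac-cluster/asmparameterfile/REGISTRY.253.928747387"
    print(parse_asm_file_name(name))
```

An alias such as spfileasm.ora does not follow this pattern, so the sketch raises ValueError for it; that is intentional, since only system-generated names carry the file number and incarnation.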

The same location can be checked from sqlplus

SQL> show parameter spfile

NAME TYPE VALUE

------------------------------------ ---------------------- ------------------------------

spfile string +CRSDG/jyrac-cluster/asmparame

terfile/REGISTRY.253.928747387

Backing up the ASM spfile

[grid@jyrac1 trace]$ asmcmd spbackup +CRSDG/jyrac-cluster/asmparameterfile/REGISTRY.253.928747387 /home/grid/asmspfile.backup

View the contents of the ASM spfile backup

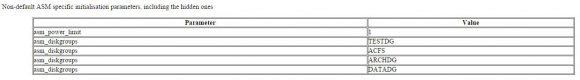

[grid@jyrac1 ~]$ strings asmspfile.backup

+ASM1.__oracle_base='/u01/app/grid'#ORACLE_BASE set from in memory value

+ASM2.asm_diskgroups='ARCHDG','DATADG'#Manual Dismount

+ASM1.asm_diskgroups='ARCHDG','DATADG','ACFS'#Manual Mount

*.asm_power_limit=1

*.diagnostic_dest='/u01/app/grid'

*.instance_type='asm'

*.large_pool_size=12M

*.remote_login_passwordfile='EXCLUSIVE'

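This is a copy of the ASM spfile; it contains the parameters and their associated comments

ASM spfile search order

The ASM instance needs to read the spfile at startup. But while the disk group holding the spfile is not mounted, ASM knows neither which disk group contains the spfile nor the value of the ASM disk discovery string. When an Oracle ASM instance searches for an initialization parameter file, the search order is:

1.The initialization parameter file location specified in the Grid Plug and Play (GPnP) profile

2.If no location is specified in the GPnP profile, the search order becomes:

2.1.The spfile in the Oracle ASM instance home (for example, $ORACLE_HOME/dbs/spfile+ASM.ora)

2.2.The pfile in the Oracle ASM instance home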
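The search order above can be written down as a small lookup function. This is only an illustration of the order, not how ASM implements it: the function name is hypothetical, and the pfile name init+ASM.ora is an assumption based on the usual init<SID>.ora convention (the text only names the spfile).

```python
import os
from typing import Optional


def resolve_asm_parameter_file(
    gpnp_spfile: Optional[str], oracle_home: str, sid: str = "+ASM"
) -> Optional[str]:
    """Return the parameter file ASM would pick, following the documented order."""
    # 1. A location recorded in the GPnP profile always wins.
    if gpnp_spfile:
        return gpnp_spfile

    dbs = os.path.join(oracle_home, "dbs")
    # 2.1 spfile in the ASM home, then 2.2 pfile in the ASM home.
    # The pfile name is an assumption (init<SID>.ora convention).
    for name in (f"spfile{sid}.ora", f"init{sid}.ora"):
        candidate = os.path.join(dbs, name)
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    print(resolve_asm_parameter_file(None, os.environ.get("ORACLE_HOME", "/tmp")))
```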

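This says nothing about the ASM disk discovery string, but it does at least point us to the spfile and the GPnP profile. The values below come from an Exadata environment: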

[root@jyrac2 ~]# find / -name profile.xml

/u01/app/product/11.2.0/crs/gpnp/jyrac2/profiles/peer/profile.xml

/u01/app/product/11.2.0/crs/gpnp/profiles/peer/profile.xml

[grid@jyrac2 peer]$ gpnptool getpval -p=profile.xml -?

Oracle GPnP Tool

getpval Get value(s) from GPnP Profile

Usage:

"gpnptool getpval ", where switches are:

-prf Profile Tag: , optional

-[id:]prf_cn Profile Tag: , optional

-[id:]prf_pa Profile Tag: , optional

-[id:]prf_sq Profile Tag: , optional

-[id:]prf_cid Profile Tag: , optional

-[pid:]nets Profile Tag: children of , optional

-[pid:]haip Profile Tag: children of , optional

-[id:]haip_ma Profile Tag: , optional

-[id:]haip_bm Profile Tag: , optional

-[id:]haip_s Profile Tag: , optional

-[pid:]hnet Profile Tag: children of , optional

-[id:]hnet_nm Profile Tag: , optional

-[pid:]net Profile Tag: children of , optional

-[id:]net_ip Profile Tag: , optional

-[id:]net_use Profile Tag: , optional

-[id:]net_nt Profile Tag: , optional

-[id:]net_aip Profile Tag: , optional

-[id:]net_ada Profile Tag: , optional

-[pid:]asm Profile Tag: children of , optional

-[id:]asm_dis Profile Tag: , optional

-[id:]asm_spf Profile Tag: , optional

-[id:]asm_uid Profile Tag: , optional

-[pid:]css Profile Tag: children of , optional

-[id:]css_dis Profile Tag: , optional

-[id:]css_ld Profile Tag: , optional

-[id:]css_cin Profile Tag: , optional

-[id:]css_cuv Profile Tag: , optional

-[pid:]ocr Profile Tag: children of , optional

-[id:]ocr_oid Profile Tag: , optional

-rmws Remove whitespace from xml, optional

-fmt[=0,2] Format profile. Value is ident level,step, optional

-p[=profile.xml] GPnP profile name

-o[=gpnptool.out] Output result to a file, optional

-o- Output result to stdout

-ovr Overwrite output file, if exists, optional

-t[=3] Trace level (min..max=0..7), optional

-f= Command file name, optional

-? Print verb help and exit

[grid@jyrac2 peer]$ gpnptool getpval -p=profile.xml -asm_dis -o-

[grid@jyrac2 peer]$ gpnptool getpval -p=profile.xml -asm_spf -o-

+CRSDG/jyrac-cluster/asmparameterfile/spfileasm.ora

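A single-instance environment has no GPnP profile, so to support an ASM spfile in a disk group, the discovery string and the spfile location are kept as attributes of the ora.asm resource instead:

[grid@jyrac1 ~]$ crsctl stat res ora.asm -p | egrep "ASM_DISKSTRING|SPFILE"

ASM_DISKSTRING=

SPFILE=+DATA/ASM/ASMPARAMETERFILE/registry.253.822856169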
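The resource profile printed by crsctl is a plain list of KEY=VALUE lines, so it is easy to pick apart in a script. A minimal sketch, assuming the output has already been captured as text (the function name is my own):

```python
from typing import Dict


def parse_resource_profile(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines such as those printed by 'crsctl stat res ... -p'."""
    attrs: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or "=" not in line:
            continue
        # Split on the first '=' only; values may themselves contain '='.
        key, _, value = line.partition("=")
        attrs[key.strip()] = value.strip()
    return attrs


if __name__ == "__main__":
    sample = (
        "ASM_DISKSTRING=\n"
        "SPFILE=+DATA/ASM/ASMPARAMETERFILE/registry.253.822856169\n"
    )
    profile = parse_resource_profile(sample)
    print(profile["SPFILE"])
    print(repr(profile["ASM_DISKSTRING"]))
```

An empty ASM_DISKSTRING, as in the output above, comes back as an empty string rather than a missing key.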

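We now know where ASM looks for its disks and for the spfile. But how can ASM read the spfile when the disk group is not mounted and the ASM instance has not even started? The answer lies in the ASM disk header. To support storing the ASM spfile in a disk group, two fields were added to the ASM disk header:

.kfdhdb.spfile: the allocation unit (AU) number of the ASM spfile

.kfdhdb.spfflg: the ASM spfile flag. If it is 1, the ASM spfile is stored in the AU indicated by kfdhdb.spfile.

As part of disk discovery, the ASM instance reads the disk headers and looks for spfile information. Once it finds a disk that holds the spfile, it can read the actual initialization parameters.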

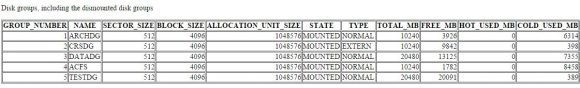

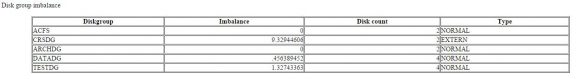

First, check the state and redundancy type of disk group CRSDG in my environment

[grid@jyrac1 ~]$ asmcmd lsdg -g CRSDG | cut -c1-26

Inst_ID State Type

1 MOUNTED EXTERN

2 MOUNTED EXTERN

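Disk group CRSDG is mounted and uses external redundancy. This means the ASM spfile has no mirror copy, so only one disk should have the kfdhdb.spfile and kfdhdb.spfflg fields set. For example: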

[grid@jyrac1 ~]$ asmcmd lsdsk -G CRSDG --suppressheader

/dev/raw/raw1

/dev/raw/raw8

[grid@jyrac1 ~]$ kfed read /dev/raw/raw1 | grep spf

kfdhdb.spfile: 0 ; 0x0f4: 0x00000000

kfdhdb.spfflg: 0 ; 0x0f8: 0x00000000

[grid@jyrac1 ~]$ kfed read /dev/raw/raw8 | grep spf

kfdhdb.spfile: 30 ; 0x0f4: 0x0000001e

kfdhdb.spfflg: 1 ; 0x0f8: 0x00000001

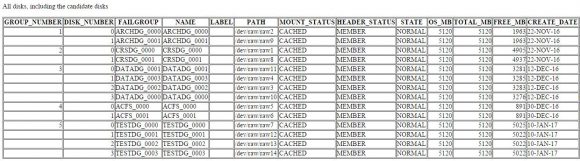

SQL> select group_number,disk_number, name,path from v$asm_disk where group_number=2;

GROUP_NUMBER DISK_NUMBER NAME PATH

------------ ----------- ------------------------------------------------------------ --------------------------------------------------

2 1 CRSDG_0001 /dev/raw/raw8

2 0 CRSDG_0000 /dev/raw/raw1

As shown, only one disk (/dev/raw/raw8, CRSDG_0001) stores the ASM spfile
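Checking each disk by eye works for two disks but not for many. The sketch below scans captured kfed output for the two header fields and returns the AU number only when the flag is set. The function name and regular expression are illustrative assumptions; the field layout is taken from the kfed output shown above.

```python
import re
from typing import Optional

# Matches lines such as "kfdhdb.spfile: 30 ; 0x0f4: 0x0000001e"
_SPF_FIELD = re.compile(r"^\s*(kfdhdb\.spf\w+):\s+(\d+)\s*;", re.MULTILINE)


def spfile_au(kfed_output: str) -> Optional[int]:
    """Return the AU number holding the spfile, or None if this disk has none."""
    fields = {name: int(value) for name, value in _SPF_FIELD.findall(kfed_output)}
    if fields.get("kfdhdb.spfflg") == 1:
        return fields.get("kfdhdb.spfile")
    return None


if __name__ == "__main__":
    raw8 = (
        "kfdhdb.spfile: 30 ; 0x0f4: 0x0000001e\n"
        "kfdhdb.spfflg: 1 ; 0x0f8: 0x00000001\n"
    )
    print(spfile_au(raw8))
```

Feeding it the output of kfed read for every disk in the group would identify the one disk (or, with mirroring, the several disks) carrying the spfile.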

Use dd to view the contents of AU 30 on disk /dev/raw/raw8

[grid@jyrac1 ~]$ dd if=/dev/raw/raw8 bs=1048576 skip=30 count=1 | strings

+ASM1.__oracle_base='/u01/app/grid'#ORACLE_BASE set from in memory value

+ASM2.asm_diskgroups='ARCHDG','DATADG'#Manual Dismount

+ASM1.asm_diskgroups='ARCHDG','DATADG','ACFS'#Manual Mount

*.asm_power_limit=1

*.diagnostic_dest='/u01/app/grid'

*.instance_type='asm'

*.large_pool_size=12M

*.remote_login_passwordfile='EXCLUSIVE'

1+0 records in

1+0 records out

1048576 bytes (1.0 MB) copied, 0.035288 seconds, 29.7 MB/s

KeMQ

jyrac-cluster/asmparameterfile/spfileasm.ora

AU 30 on disk /dev/raw/raw8 does indeed hold the ASM spfile contents
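The dd | strings pipeline boils down to two steps: seek to AU number times AU size, then keep the printable runs. Here is a rough Python equivalent, assuming a 1 MB AU as in the dd command. Both function names are my own, and on a real raw character device the read may need aligned, unbuffered I/O, so treat this as something to try against an image file or block device copy first.

```python
import re
from typing import List

AU_SIZE = 1048576  # 1 MB, matching bs=1048576 in the dd command

# Runs of at least four printable ASCII characters, like strings(1) by default
_PRINTABLE = re.compile(rb"[\x20-\x7e]{4,}")


def read_au(path: str, au_number: int, au_size: int = AU_SIZE) -> bytes:
    """Read one allocation unit; the byte offset is au_number * au_size."""
    with open(path, "rb") as disk:
        disk.seek(au_number * au_size)
        return disk.read(au_size)


def printable_strings(data: bytes) -> List[str]:
    """Return the printable ASCII runs found in data."""
    return [m.group().decode("ascii") for m in _PRINTABLE.finditer(data)]


if __name__ == "__main__":
    import sys

    # Usage: python read_au.py <disk or image path> <AU number>
    if len(sys.argv) == 3:
        for line in printable_strings(read_au(sys.argv[1], int(sys.argv[2]))):
            print(line)
```

For AU 30 the offset works out to 30 * 1048576 = 31457280 bytes, which is exactly what skip=30 with bs=1048576 tells dd to do.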

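ASM spfile alias block

Besides the new disk header fields, there is a new metadata block type, KFBTYP_ASMSPFALS, which describes the ASM spfile alias. The ASM spfile alias is stored in the last block of the AU that holds the ASM spfile. Look at the last block, block 255, of AU 30 on disk /dev/raw/raw8: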

[grid@jyrac1 ~]$ kfed read /dev/raw/raw8 aun=30 blkn=255

kfbh.endian: 1 ; 0x000: 0x01

kfbh.hard: 130 ; 0x001: 0x82

kfbh.type: 27 ; 0x002: KFBTYP_ASMSPFALS

kfbh.datfmt: 1 ; 0x003: 0x01

kfbh.block.blk: 255 ; 0x004: blk=255

kfbh.block.obj: 253 ; 0x008: file=253

kfbh.check: 1364026699 ; 0x00c: 0x514d654b

kfbh.fcn.base: 0 ; 0x010: 0x00000000

kfbh.fcn.wrap: 0 ; 0x014: 0x00000000

kfbh.spare1: 0 ; 0x018: 0x00000000

kfbh.spare2: 0 ; 0x01c: 0x00000000

kfspbals.incarn: 928747387 ; 0x000: 0x375b8f7b

kfspbals.blksz: 512 ; 0x004: 0x00000200

kfspbals.size: 3 ; 0x008: 0x0003

kfspbals.path.len: 44 ; 0x00a: 0x002c

kfspbals.path.buf: ; 0x00c: length=0

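This metadata block is small, and most of its entries are block header information (the kfbh.* fields). The actual ASM spfile alias data (the kfspbals.* fields) consists of only a few entries. The spfile incarnation, 928747387, is part of the file name (REGISTRY.253.928747387); the ASM spfile block size is 512 bytes and the file size is 3 blocks. The path information is empty, which means there is no actual ASM spfile alias.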
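A few of the numbers in this block can be cross-checked with simple arithmetic. The sketch below assumes a 1 MB AU (as used in the dd command) and a 4096-byte ASM metadata block; the 4096 figure is my assumption, chosen because it makes block 255 the last block of the AU. The helper names are illustrative only.

```python
AU_SIZE = 1048576           # 1 MB allocation unit, as in the dd command
METADATA_BLOCK_SIZE = 4096  # assumed ASM metadata block size


def last_block_in_au(au_size: int = AU_SIZE, block_size: int = METADATA_BLOCK_SIZE) -> int:
    """Index of the last metadata block in an AU (blocks are numbered from 0)."""
    return au_size // block_size - 1


def spfile_size_bytes(blksz: int, size: int) -> int:
    """Size of the spfile implied by kfspbals.blksz and kfspbals.size."""
    return blksz * size


if __name__ == "__main__":
    print(last_block_in_au())         # the blkn value to pass to kfed
    print(spfile_size_bytes(512, 3))  # spfile size in bytes
    print(int("375b8f7b", 16))        # decimal form of the incarnation hex value
```

With these inputs the last block index is 255, the spfile occupies 512 * 3 = 1536 bytes, and 0x375b8f7b converts to 928747387, the incarnation that appears in the file name.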

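Next, create an ASM spfile alias: first create a pfile from the existing spfile, restart the instance with that pfile, and then create the spfile under a user-specified name from the pfile: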

[grid@jyrac1 ~]$ sqlplus / as sysasm

SQL> create pfile='/tmp/pfile+ASM.ora' from spfile;

File created.

SQL> shutdown abort;

ASM instance shutdown

SQL> startup pfile='/tmp/pfile+ASM.ora';

ASM instance started

Total System Global Area 1135747072 bytes

Fixed Size 2297344 bytes

Variable Size 1108283904 bytes

ASM Cache 25165824 bytes

ASM diskgroups mounted

SQL> create spfile='+CRSDG/jyrac-cluster/asmparameterfile/spfileasm.ora' from pfile='/tmp/pfile+ASM.ora';

File created.

SQL> exit

Running asmcmd find again now shows two entries

[grid@jyrac1 trace]$ asmcmd find --type ASMPARAMETERFILE +CRSDG "*"

+CRSDG/jyrac-cluster/asmparameterfile/REGISTRY.253.928747387

+CRSDG/jyrac-cluster/asmparameterfile/spfileasm.ora

We now see the ASM spfile itself (REGISTRY.253.928747387) and its alias, or link (spfileasm.ora). Listing the directory confirms that spfileasm.ora is indeed an alias of the registry file

[grid@jyrac1 ~]$ asmcmd ls -l +CRSDG/jyrac-cluster/asmparameterfile/

Type Redund Striped Time Sys Name

ASMPARAMETERFILE UNPROT COARSE JAN 12 16:00:00 Y REGISTRY.253.928745345

N spfileasm.ora => +CRSDG/jyrac-cluster/asmparameterfile/REGISTRY.253.928745345

Now look again at the last block, block 255, of AU 30 on disk /dev/raw/raw8:

[grid@jyrac1 ~]$ kfed read /dev/raw/raw8 aun=30 blkn=255

kfbh.endian: 1 ; 0x000: 0x01

kfbh.hard: 130 ; 0x001: 0x82

kfbh.type: 27 ; 0x002: KFBTYP_ASMSPFALS

kfbh.datfmt: 1 ; 0x003: 0x01

kfbh.block.blk: 255 ; 0x004: blk=255

kfbh.block.obj: 253 ; 0x008: file=253

kfbh.check: 1364026699 ; 0x00c: 0x514d654b

kfbh.fcn.base: 0 ; 0x010: 0x00000000

kfbh.fcn.wrap: 0 ; 0x014: 0x00000000

kfbh.spare1: 0 ; 0x018: 0x00000000

kfbh.spare2: 0 ; 0x01c: 0x00000000

kfspbals.incarn: 928745345 ; 0x000: 0x375b8f7b

kfspbals.blksz: 512 ; 0x004: 0x00000200

kfspbals.size: 3 ; 0x008: 0x0003

kfspbals.path.len: 44 ; 0x00a: 0x002c

kfspbals.path.buf:jyrac-cluster/asmparameterfile/spfileasm.ora ; 0x00c: length=44

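The alias file name now appears in the ASM spfile alias block, along with a new incarnation number that reflects the creation time of the new ASM spfile.

Summary:

Starting with ASM 11.2, the ASM spfile can be stored in an ASM disk group. To support this feature, ASMCMD gained commands for managing the spfile, and new ASM metadata structures were added to the ASM disk header.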