朋友反馈他们单位Oracle RAC中的一个节点异常关闭了,操作环境是IBM小机720,EMC存储,Oracle是11.2.0.4。收集了一些信息给我,查看该节点实例的alert.log文件发现了如下信息:

Thu Mar 31 14:29:18 2016 NOTE:Waiting for all pending writes to complete before de-registering: grpnum 3 Thu Mar 31 14:29:20 2016 WARNING: group 3 dismounted: failed to write virtual extent 263 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 263 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 265 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 246 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 260 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 261 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 244 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 257 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 259 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 254 of file 264 WARNING: group 3 dismounted: failed to write virtual extent 254 of file 264 Thu Mar 31 14:29:21 2016 WARNING: requested mirror side 1 of virtual extent 0 logical extent 0 offset 16384 is not allocated; I/O request failed WARNING: requested mirror side 2 of virtual extent 0 logical extent 1 offset 16384 is not allocated; I/O request failed WARNING: requested mirror side 3 of virtual extent 0 logical extent 2 offset 16384 is not allocated; I/O request failed Errors in file /opt/app/oracle/diag/rdbms/jtpsoft/jtpsoft1/trace/jtpsoft1_arc1_10551306.trc: ORA-00202: control file: '+GJJ_DG/jtpsoft/controlfile/current.260.841581789' ORA-15079: ASM file is closed ORA-15079: ASM file is closed ORA-15079: ASM file is closed System state dump requested by (instance=1, osid=15007960 (CKPT)), summary=[abnormal instance termination]. System State dumped to trace file /opt/app/oracle/diag/rdbms/jtpsoft/jtpsoft1/trace/jtpsoft1_diag_4784254_20160331142927.trc Thu Mar 31 14:29:28 2016 ORA-1092 : opitsk aborting process Thu Mar 31 14:29:34 2016 ORA-1092 : opitsk aborting process Thu Mar 31 14:29:34 2016 License high water mark = 181 Thu Mar 31 14:29:37 2016 Instance terminated by CKPT, pid = 15007960 USER (ospid: 15073490): terminating the instance Instance terminated by USER, pid = 15073490

从上面信息可以看到磁盘组3(也就是磁盘组+GJJ_DG)已经dismounted了并且ckpt进程终止了实例。如是查看跟踪文件/opt/app/oracle/diag/rdbms/jtpsoft/jtpsoft1/trace/jtpsoft1_arc1_10551306.trc,可以看到如下内容:

Trace file /opt/app/oracle/diag/rdbms/jtpsoft/jtpsoft1/trace/jtpsoft1_arc1_10551306.trc

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

ORACLE_HOME = /opt/app/oracle/product/11.2.0/db_1

System name: AIX

Node name: p720a

Release: 1

Version: 6

Machine: 00C855104C00

Instance name: jtpsoft1

Redo thread mounted by this instance: 1

Oracle process number: 41

Unix process pid: 10551306, image: oracle@p720a (ARC1)

*** 2016-03-31 14:29:21.527

*** SESSION ID:(641.1) 2016-03-31 14:29:21.527

*** CLIENT ID:() 2016-03-31 14:29:21.527

*** SERVICE NAME:(SYS$BACKGROUND) 2016-03-31 14:29:21.527

*** MODULE NAME:() 2016-03-31 14:29:21.527

*** ACTION NAME:() 2016-03-31 14:29:21.527

*** TRACE FILE RECREATED AFTER BEING REMOVED ***

WARNING:failed xlate 2

WARNING: requested mirror side 1 of virtual extent 0 logical extent 0 offset 16384 is not allocated; I/O request failed

WARNING:failed xlate 2

WARNING: requested mirror side 2 of virtual extent 0 logical extent 1 offset 16384 is not allocated; I/O request failed

WARNING:failed xlate 2

WARNING: requested mirror side 3 of virtual extent 0 logical extent 2 offset 16384 is not allocated; I/O request failed

DDE rules only execution for: ORA 202

----- START Event Driven Actions Dump ----

---- END Event Driven Actions Dump ----

----- START DDE Actions Dump -----

Executing SYNC actions

----- START DDE Action: 'DB_STRUCTURE_INTEGRITY_CHECK' (Async) -----

Successfully dispatched

----- END DDE Action: 'DB_STRUCTURE_INTEGRITY_CHECK' (SUCCESS, 0 csec) -----

Executing ASYNC actions

----- END DDE Actions Dump (total 0 csec) -----

*** 2016-03-31 14:29:21.533

dbkedDefDump(): Starting a non-incident diagnostic dump (flags=0x0, level=1, mask=0x0)

----- Error Stack Dump -----

ORA-00202: control file: '+GJJ_DG/jtpsoft/controlfile/current.260.841581789'

ORA-15079: ASM file is closed

ORA-15079: ASM file is closed

ORA-15079: ASM file is closed

----- SQL Statement (None) -----

Current SQL information unavailable - no cursor.

----- Call Stack Trace -----

calling call entry argument values in hex

location type point (? means dubious value)

-------------------- -------- -------------------- ----------------------------

skdstdst()+40 bl 0000000109B3BDC0 FFFFFFFFFFF6D60 ? 000000001 ?

000000001 ? 000000000 ?

000000000 ? 000000001 ?

000000001 ? 000000000 ?

ksedst1()+112 call skdstdst() FFFFFFFFFFF6E38 ? 000002004 ?

110720F48 ? 10A6D6A74 ?

10A6D5F50 ? FFFFFFFFFFF7190 ?

FFFFFFFFFFF6F40 ?

2050033FFFF6E18 ?

ksedst()+40 call ksedst1() 10A6D6A68 ? 7000000000291 ?

10A6D6A3C ? B000000000000 ?

10A6D5F50 ? 000000000 ?

400000000 ? 1D25C0C474A0C ?

dbkedDefDump()+1516 call ksedst() 000000001 ? FFFFFFFFFFF72F0 ?

000000001 ? 000000000 ?

000000003 ? 00001ED50 ?

000000000 ? 000000000 ?

ksedmp()+72 call dbkedDefDump() 1A006FE88 ? 9001000A0000E00 ?

FFFFFFFFFFF7CD0 ? 004C3009B ?

000000000 ? 000000001 ?

000000001 ? 0000000CC ?

kcccsi()+416 call ksedmp() CA000000CA ? 000000001 ?

000000031 ? 7000104BCDE1D56 ?

000000000 ? 7000104C8CC4478 ?

000000006 ? 000000030 ?

kccrhd()+1488 call kcccsi() 000000020 ? 000000000 ?

110738B50 ? 10A344FE0 ?

FFFFFFFFFFF7FA0 ? 004C3009B ?

102D0B800 ? 11298BF30 ?

kccgft_refresh_hdr( call kccrhd() FFFFFFFFFFF8100 ? 004C3009B ?

)+124 11297B0B0 ? FFFFFFFFFFFA5E0 ?

6B736C636D617266 ?

112939850 ? 10A38F248 ?

10A38F258 ?

kcc_noenq_refresh() call kccgft_refresh_hdr( 110E9DA20 ? 000000004 ?

+40 ) 000000018 ? 11012D7C8 ?

000000021 ? 00000374E ?

000000001 ? FFFFFFFFFFF81F0 ?

kccocx_noenq()+292 call kcc_noenq_refresh() 20B6CECD0 ? 000000102 ?

000000000 ? 000000000 ?

FFFFFFFFFFF8070 ?

44244288000537D0 ?

9000000000051AC ?

FFFFFFFFFFF8030 ?

...省略...

上面的内容也就是不能对磁盘组执行IO操作,并没有得到更有用的信息。如是查看asm的alert.log文件,可以看到如下信息:

Thu Mar 31 14:29:18 2016 WARNING: Waited 15 secs for write IO to PST disk 0 in group 3. WARNING: Waited 15 secs for write IO to PST disk 1 in group 3. WARNING: Waited 15 secs for write IO to PST disk 2 in group 3. WARNING: Waited 15 secs for write IO to PST disk 0 in group 3. WARNING: Waited 15 secs for write IO to PST disk 1 in group 3. WARNING: Waited 15 secs for write IO to PST disk 2 in group 3. Thu Mar 31 14:29:18 2016 NOTE: process _b000_+asm1 (22544528) initiating offline of disk 0.3043853018 (GJJ_DG_0000) with mask 0x7e in group 3 NOTE: process _b000_+asm1 (22544528) initiating offline of disk 1.3043853019 (GJJ_DG_0001) with mask 0x7e in group 3 NOTE: process _b000_+asm1 (22544528) initiating offline of disk 2.3043853020 (GJJ_DG_0002) with mask 0x7e in group 3 NOTE: checking PST: grp = 3 GMON checking disk modes for group 3 at 10 for pid 30, osid 22544528 ERROR: no read quorum in group: required 2, found 0 disks NOTE: checking PST for grp 3 done. NOTE: initiating PST update: grp = 3, dsk = 0/0xb56d82da, mask = 0x6a, op = clear NOTE: initiating PST update: grp = 3, dsk = 1/0xb56d82db, mask = 0x6a, op = clear NOTE: initiating PST update: grp = 3, dsk = 2/0xb56d82dc, mask = 0x6a, op = clear GMON updating disk modes for group 3 at 11 for pid 30, osid 22544528 ERROR: no read quorum in group: required 2, found 0 disks Thu Mar 31 14:29:18 2016 NOTE: cache dismounting (not clean) group 3/0x46ED7239 (GJJ_DG) NOTE: messaging CKPT to quiesce pins Unix process pid: 30277648, image: oracle@p720a (B001) Thu Mar 31 14:29:18 2016 NOTE: halting all I/Os to diskgroup 3 (GJJ_DG) Thu Mar 31 14:29:18 2016 NOTE: LGWR doing non-clean dismount of group 3 (GJJ_DG) NOTE: LGWR sync ABA=22.4306 last written ABA 22.4306 WARNING: Offline for disk GJJ_DG_0000 in mode 0x7f failed. WARNING: Offline for disk GJJ_DG_0001 in mode 0x7f failed. WARNING: Offline for disk GJJ_DG_0002 in mode 0x7f failed. Thu Mar 31 14:29:18 2016 kjbdomdet send to inst 2 detach from dom 3, sending detach message to inst 2 Thu Mar 31 14:29:18 2016 List of instances: 1 2 Dirty detach reconfiguration started (new ddet inc 1, cluster inc 20) Global Resource Directory partially frozen for dirty detach * dirty detach - domain 3 invalid = TRUE 305 GCS resources traversed, 0 cancelled Thu Mar 31 14:29:18 2016 ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Dirty Detach Reconfiguration complete Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "GJJ_DG" is being dismounted Thu Mar 31 14:29:18 2016 WARNING: dirty detached from domain 3 NOTE: cache dismounted group 3/0x46ED7239 (GJJ_DG) NOTE: cache deleting context for group GJJ_DG 3/0x46ed7239 SQL> alter diskgroup GJJ_DG dismount force /* ASM SERVER:1189966393 */ Thu Mar 31 14:29:18 2016 NOTE: No asm libraries found in the system ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted Thu Mar 31 14:29:27 2016 NOTE: ASM client jtpsoft1:jtpsoft disconnected unexpectedly. NOTE: check client alert log. NOTE: Trace records dumped in trace file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_ora_14024932.trc ASM Health Checker found 1 new failures Thu Mar 31 14:29:30 2016 ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted ERROR: ORA-15130 in COD recovery for diskgroup 3/0x46ed7239 (GJJ_DG) ERROR: ORA-15130 thrown in RBAL for group number 3 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_rbal_12386526.trc: ORA-15130: diskgroup "" is being dismounted Thu Mar 31 14:29:37 2016 GMON dismounting group 3 at 12 for pid 31, osid 30277648 Thu Mar 31 14:29:37 2016 NOTE: Disk GJJ_DG_0000 in mode 0x7f marked for de-assignment NOTE: Disk GJJ_DG_0001 in mode 0x7f marked for de-assignment NOTE: Disk GJJ_DG_0002 in mode 0x7f marked for de-assignment SUCCESS: diskgroup GJJ_DG was dismounted SUCCESS: alter diskgroup GJJ_DG dismount force /* ASM SERVER:1189966393 */ SUCCESS: ASM-initiated MANDATORY DISMOUNT of group GJJ_DG Thu Mar 31 14:29:37 2016 NOTE: diskgroup resource ora.GJJ_DG.dg is offline Thu Mar 31 14:29:44 2016 Errors in file /opt/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_ora_15073504.trc: ORA-17503: ksfdopn:2 Failed to open file +GJJ_DG/jtpsoft/spfilejtpsoft.ora ORA-15001: diskgroup "GJJ_DG" does not exist or is not mounted

ASMCMD> lsdg State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name MOUNTED NORMAL N 512 4096 1048576 614400 606078 204800 200639 0 N BACK_DG/ MOUNTED NORMAL N 512 4096 1048576 10240 9310 2048 3631 0 Y CRS_DG/ MOUNTED NORMAL N 512 4096 1048576 1228800 858311 409600 224355 0 N GJJ_DG/

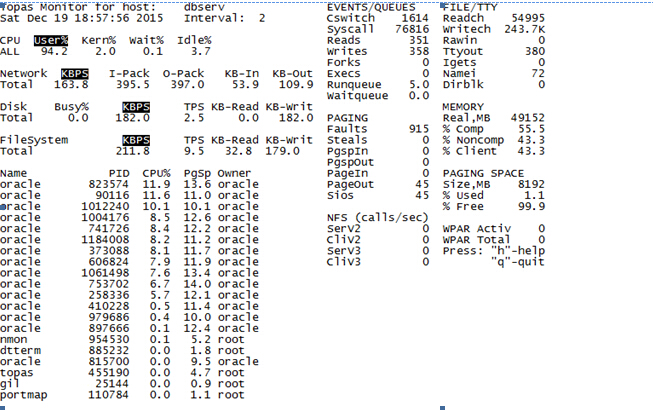

从上面的信息’Thu Mar 31 14:29:18 2016’与’WARNING: Waited 15 secs for write IO to PST disk 1 in group 3’可知在2016-03-31 14:29:18这个时间点,由于对ASM正常或高冗余磁盘所执行的ASM PST心跳检测出现了延迟,而且延迟时间超过了15秒,因此ASM实例dismount了ASM磁盘组。这种心跳方式会忽略对外部冗余磁盘组的检测,在ASM PST重新验证之前ASM实例会停止执行更多的PST心跳检测,但PST心跳延迟不会dismount外部冗余磁盘组。可能出现这种情况有以下几种原因:

1.由于多路径设备的某些物理路径脱机或丢失

2.多路径执行路径故障转移

3.服务器负载或存储/多路径/操作系统的维护,但这种情况可以排除因为负载不高,也没进行任何维护操作。

那么在出现这种情况时,可以通过以下几种方式来解决:

1.检查操作系统和存储对磁盘管理的响应时间

2.尽可能把磁盘的响应时间维持在15秒以下,但这将处于了操作系统,多路径软件与内核参数等多种因素,需要一一排查。

3.如果不能保证磁盘的响应时间维持在15秒以下,那么可以对ASM实例设置隐含参数_asm_hbetaiowait,对于这个参值数如果遇到bug 17274537可以设置120,并且这个bug在12.1.0.2中被修复了。

Thu Mar 31 14:30:05 2016

SQL> ALTER DISKGROUP GJJ_DG MOUNT /* asm agent *//* {0:23:23972} */

NOTE: cache registered group GJJ_DG number=3 incarn=0x46ed72a8

NOTE: cache began mount (not first) of group GJJ_DG number=3 incarn=0x46ed72a8

NOTE: Assigning number (3,0) to disk (/dev/rhdiskpower5)

NOTE: Assigning number (3,1) to disk (/dev/rhdiskpower6)

NOTE: Assigning number (3,2) to disk (/dev/rhdiskpower7)

Thu Mar 31 14:30:05 2016

GMON querying group 3 at 14 for pid 27, osid 29163580

NOTE: cache opening disk 0 of grp 3: GJJ_DG_0000 path:/dev/rhdiskpower5

NOTE: F1X0 found on disk 0 au 2 fcn 0.0

NOTE: cache opening disk 1 of grp 3: GJJ_DG_0001 path:/dev/rhdiskpower6

NOTE: F1X0 found on disk 1 au 2 fcn 0.0

NOTE: cache opening disk 2 of grp 3: GJJ_DG_0002 path:/dev/rhdiskpower7

NOTE: F1X0 found on disk 2 au 2 fcn 0.0

NOTE: cache mounting (not first) normal redundancy group 3/0x46ED72A8 (GJJ_DG)

Thu Mar 31 14:30:05 2016

kjbdomatt send to inst 2

Thu Mar 31 14:30:05 2016

NOTE: attached to recovery domain 3

NOTE: redo buffer size is 256 blocks (1053184 bytes)

Thu Mar 31 14:30:05 2016

NOTE: LGWR attempting to mount thread 2 for diskgroup 3 (GJJ_DG)

NOTE: LGWR found thread 2 closed at ABA 22.4306

NOTE: LGWR mounted thread 2 for diskgroup 3 (GJJ_DG)

NOTE: LGWR opening thread 2 at fcn 0.383934 ABA 23.4307

NOTE: cache mounting group 3/0x46ED72A8 (GJJ_DG) succeeded

NOTE: cache ending mount (success) of group GJJ_DG number=3 incarn=0x46ed72a8

Thu Mar 31 14:30:05 2016

NOTE: Instance updated compatible.asm to 11.2.0.0.0 for grp 3

SUCCESS: diskgroup GJJ_DG was mounted

SUCCESS: ALTER DISKGROUP GJJ_DG MOUNT /* asm agent *//* {0:23:23972} */

从上面的信息可以看到在 2016-03-31 14:30:05这个时间点asm_agent在执行mount磁盘组(GJJ_DG),在14:29:18 dismount磁盘组到14:30:05 mount磁盘之间的时间间隔是47秒。所以等我检查时ASM磁盘组(GJJ_DG)已经mount成功了,于是只能让客户去检查操作系统,多路径软件和光纤。